Delivering a Conversational AI Avatar from PoC to Live Use

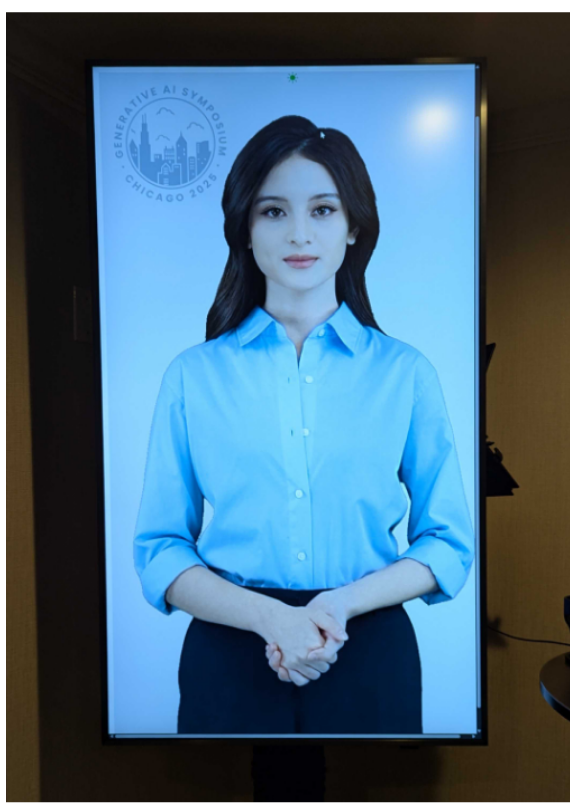

As our company prepared to host the GenAI Symposium 2025 in Chicago, we were also hard at work developing Jaine, our AI-powered animated avatar. Because the GenAI Symposium would be a gathering of clients, partners, and internal experts from all over the world, Jaine would serve as a real-time, multilingual voice assistant—greeting guests and answering questions to enhance the event experience.

Key Benefits

Avatar launched on the main day of the GenAI Symposium, supporting real-time conversations in multiple languages.

Stayed live for 9+ hours with <3s average latency and consistently high engagement.

Handled questions on session times, directions, company offerings, and casual, friendly exchanges.

Admin tooling and observability—including Langfuse—enabled fine‑tuned control, ensuring reliability and responsiveness.

Clients and internal staff praised the avatar’s natural tone, quick responses, and upbeat, short-form engagement.

Served as a compelling visual proof of the company’s AI capabilities, positioning the company as an innovator in human‑centered AI, not just a software vendor.

The Challenge

Designing a video and voice-based AI assistant for a live, multi-day event came with a series of technical and experiential challenges:

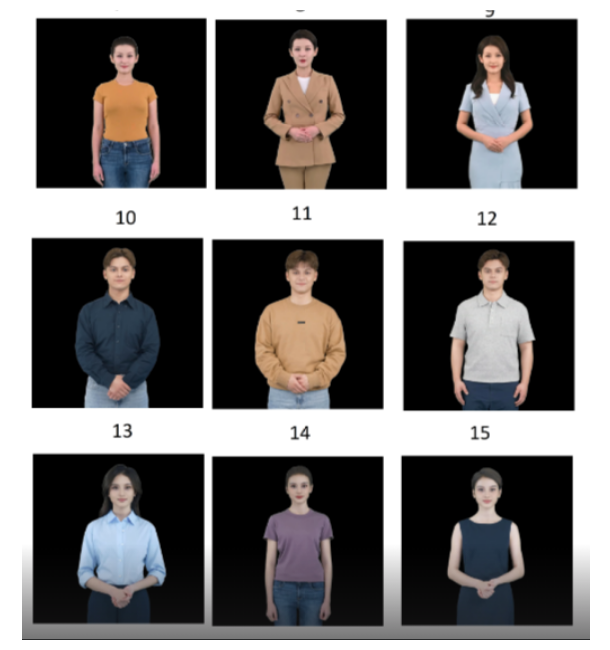

- Defining the Persona: While there was initial interest in using the likeness of a company executive or spokesperson, the idea was ultimately rejected on ethical grounds. The team voted in favor of a stylized digital persona that could represent the company’s voice without raising privacy or bias concerns.

- Training for Conversation: The avatar needed to respond to spoken queries within two to three seconds and operate entirely through voice, with no keyboard or touchscreen interaction. It also needed to switch naturally between English, German, French, Russian, and Spanish, depending on the user’s language, while maintaining a conversational tone that was both professional and friendly.

- Adhering to Security and Compliance: The system needed to comply with GDPR, avoid storing personal data, and reset after periods of inactivity to reduce screen clutter and cloud costs.

- Maintaining Visual Excellence: Equally important was the need to present a visual experience that would impress clients—a high-resolution, fluidly animated avatar that reflected the company’s technical capabilities.

The Solution

Through close collaboration across AI, engineering, design, and event support teams, the avatar project became a reality. Powered by a natural voice interface and animated in real time, the system we designed interpreted and classified spoken queries, retrieved relevant answers from optimized knowledge bases, and delivered concise responses in a human-like tone.

To orchestrate the multi-step query processing and maintain modularity across tools, the team employed ARGO, First Line Software’s proprietary framework for agentic RAG implementations. ARGO enabled a structured, modular environment to manage the avatar’s decision-making flow—coordinating intent classification, tool routing, and grounded response generation. This allowed the system to maintain responsiveness and consistency even in the unpredictable conditions of a live event, ensuring both flexibility and reliability at scale.

The following sections describe how the avatar was architected, built, and tested for deployment in a live, high-traffic event environment.

Implementation

Technology Stack & Architecture

The core of the solution combined Azure AI services, specifically Azure Speech Service with its avatar feature, and a LangGraph-based conversation agent.

To convert user speech into text in real time, we used Azure Speech Service, configured to recognize speech in five input languages and to distinguish between speakers based on their voice (speaker diarization). Once a user utterance was successfully recognized, the resulting text was passed to the conversation agent.

A GPT-4o-mini model, provided through an Azure AI Services resource, served as the main conversation engine, handling user queries in text form.

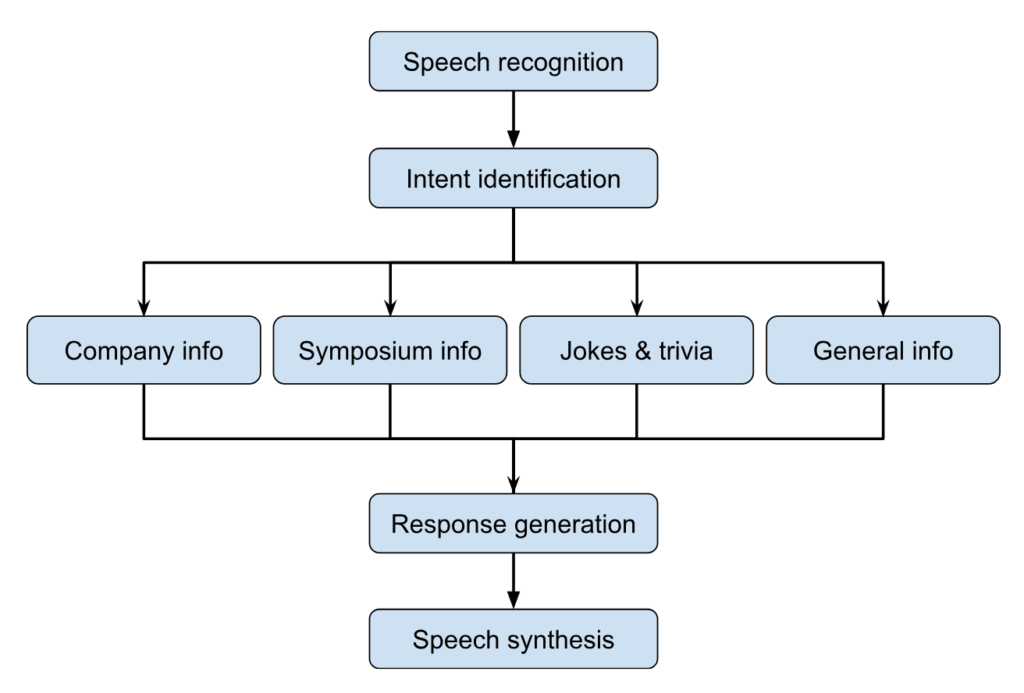

As a first step, the user’s intent was identified and the query was routed to the corresponding agent tool: company info, symposium info, jokes, or general knowledge.

As a second step, the selected tool generated the actual answer according to its own logic. The company info, symposium info, and jokes tools used a RAG approach to produce grounded replies, while general knowledge questions were answered without retrieval.
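The two-step flow (classify the intent, then run the matching tool) can be sketched as a dispatch table. This is a minimal illustration only: the tool names mirror the four tools listed above, but the keyword classifier is a hypothetical stand-in for the LLM-based intent classification, and nothing here reflects the actual ARGO or LangGraph APIs.

```python
from typing import Callable, Dict

# Hypothetical tool handlers. In the described system, three of these were
# RAG-backed and general knowledge was answered without retrieval.
def company_info(query: str) -> str:
    return f"[company RAG] {query}"

def symposium_info(query: str) -> str:
    return f"[symposium RAG] {query}"

def jokes(query: str) -> str:
    return f"[jokes RAG] {query}"

def general_knowledge(query: str) -> str:
    return f"[LLM only] {query}"

TOOLS: Dict[str, Callable[[str], str]] = {
    "company_info": company_info,
    "symposium_info": symposium_info,
    "jokes": jokes,
    "general_knowledge": general_knowledge,
}

# Keyword stand-in for the LLM intent classifier (an assumption for illustration).
# Intents are checked in insertion order; the first match wins.
KEYWORDS = {
    "symposium_info": ("session", "room", "agenda", "where", "when"),
    "company_info": ("company", "services", "offer", "clients"),
    "jokes": ("joke", "funny", "trivia"),
}

def classify_intent(query: str) -> str:
    """Step 1: map the query to a tool name, defaulting to general knowledge."""
    text = query.lower()
    for intent, words in KEYWORDS.items():
        if any(word in text for word in words):
            return intent
    return "general_knowledge"

def answer(query: str) -> str:
    """Step 2: run the tool selected in step 1."""
    return TOOLS[classify_intent(query)](query)
```

Keeping classification separate from the tools means a tool can be swapped or re-prompted without touching the routing, which matches the modularity goal described for ARGO.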

As soon as a response was generated, it was passed to the final step: synchronized speech generation.

The avatar feature of Azure Speech Service handled this step in real time, creating the feeling of an authentic, human-like conversation.
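Because the avatar had to answer in the language the guest spoke, the reply voice has to follow the recognized language. The sketch below assumes recognition yields a locale such as "de-DE" and wraps the reply in standard SSML for Azure text-to-speech. The voice names are examples of Azure neural voices chosen for illustration, not the voices actually used for Jaine, and the English fallback is an assumption.

```python
from typing import Optional
from xml.sax.saxutils import escape

# Example Azure neural voices per supported language (assumed, not Jaine's actual voices).
VOICE_BY_LANGUAGE = {
    "en": "en-US-JennyNeural",
    "de": "de-DE-KatjaNeural",
    "fr": "fr-FR-DeniseNeural",
    "ru": "ru-RU-SvetlanaNeural",
    "es": "es-ES-ElviraNeural",
}
DEFAULT_LANGUAGE = "en"

def voice_for_locale(locale: Optional[str]) -> str:
    """Map a detected locale such as 'de-DE' to a reply voice, falling back to English."""
    lang = (locale or DEFAULT_LANGUAGE).split("-")[0].lower()
    return VOICE_BY_LANGUAGE.get(lang, VOICE_BY_LANGUAGE[DEFAULT_LANGUAGE])

def build_ssml(text: str, locale: Optional[str]) -> str:
    """Wrap the agent's reply in SSML so it is spoken with the matching voice."""
    voice = voice_for_locale(locale)
    voice_locale = voice.rsplit("-", 1)[0]  # "de-DE-KatjaNeural" -> "de-DE"
    return (
        '<speak version="1.0" xmlns="http://www.w3.org/2001/10/synthesis" '
        f'xml:lang="{voice_locale}">'
        f'<voice name="{voice}">{escape(text)}</voice>'
        "</speak>"
    )
```

Matching on the language part only ("es-MX" still maps to the Spanish voice) keeps regional variants from falling through to the English default.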

The entire system was built with cost and performance in mind. Speech synthesis and avatar rendering were optimized to ensure the assistant could scale without generating excessive cloud costs. Auto-reset and auto-shutdown protocols were implemented to handle inactivity gracefully and economically.
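The auto-reset and auto-shutdown behavior can be pictured as an idle timer with two thresholds. This is a minimal sketch under stated assumptions: the class name and the 60-second and 600-second thresholds are hypothetical, and the caller is assumed to clear the conversation on "reset" and stop the avatar stream on "shutdown".

```python
import time
from dataclasses import dataclass, field
from typing import Callable

@dataclass
class InactivityMonitor:
    """Hypothetical idle tracker for a kiosk-style avatar session."""
    reset_after: float = 60.0      # seconds idle before clearing the conversation (assumed)
    shutdown_after: float = 600.0  # seconds idle before stopping the avatar stream (assumed)
    clock: Callable[[], float] = time.monotonic
    last_activity: float = field(init=False)
    _reset_done: bool = field(default=False, init=False)

    def __post_init__(self) -> None:
        self.last_activity = self.clock()

    def touch(self) -> None:
        """Call on every recognized utterance or avatar reply."""
        self.last_activity = self.clock()
        self._reset_done = False

    def check(self) -> str:
        """Return 'active', 'reset' (once per idle period), 'idle', or 'shutdown'."""
        idle = self.clock() - self.last_activity
        if idle >= self.shutdown_after:
            return "shutdown"
        if idle >= self.reset_after:
            if not self._reset_done:
                self._reset_done = True
                return "reset"
            return "idle"
        return "active"
```

Injecting the clock keeps the logic testable without waiting in real time; a background loop would simply call `check()` every second or so.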

The solution also made it possible to deploy multiple autonomous avatars, each with customizable visual characteristics and voice properties.

Interaction Modes

The system architecture is capable of supporting three distinct interaction modes:

- Voice-to-text (user input via speech with text response display)

- Text-to-voice (text queries with spoken avatar responses)

- Voice-to-voice (real-time spoken interaction without on-screen text)
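One way to model the three modes is as combinations of input and output capabilities, which makes the idea that voice-to-voice reuses parts of the other two explicit. The `Mode` fields and step names below are hypothetical labels for illustration, not identifiers from the actual system.

```python
from dataclasses import dataclass
from typing import List

@dataclass(frozen=True)
class Mode:
    speech_input: bool  # microphone + speech-to-text
    spoken_reply: bool  # avatar speaks the answer
    show_text: bool     # render recognized/generated text on screen

VOICE_TO_TEXT = Mode(speech_input=True, spoken_reply=False, show_text=True)
TEXT_TO_VOICE = Mode(speech_input=False, spoken_reply=True, show_text=True)

# Voice-to-voice takes the input side of voice-to-text and the output side of
# text-to-voice; on-screen text stays hidden by default.
VOICE_TO_VOICE = Mode(
    speech_input=VOICE_TO_TEXT.speech_input,
    spoken_reply=TEXT_TO_VOICE.spoken_reply,
    show_text=False,
)

def pipeline(mode: Mode) -> List[str]:
    """List the processing steps a query goes through in the given mode."""
    steps = ["speech_to_text" if mode.speech_input else "typed_text", "conversation_agent"]
    if mode.spoken_reply:
        steps.append("avatar_speech")
    if mode.show_text:
        steps.append("text_display")
    return steps
```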

Voice-to-voice combines the speech input of the first mode with the spoken output of the second, and it was selected as the default interaction mode for the Symposium. The other modes were hidden but could easily be activated via the settings menu; for example, a text chat window could be displayed for manual user queries, although this was used mainly for debugging. Voice-to-voice was also the most complex mode, requiring additional attention to latency, speech clarity, and conversational brevity. The main challenges included:

- Filtering out the actual user utterance from the background noise

- Keeping the conversation smooth and logical when a user spoke in several utterances, continued talking after a slight delay, or started a new query while the avatar was still responding to the previous one

- Minimizing the time for speech recognition and response generation
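The multi-utterance and interruption challenges above can be handled with a small turn assembler: recognized segments separated by short pauses are merged into one query, and speech that arrives while the avatar is talking is treated as a barge-in. This is a hypothetical sketch; the source does not describe how the team solved these problems, and the 1.2-second merge window is an assumed value that would need tuning against real venue noise.

```python
from dataclasses import dataclass, field
from typing import List, Optional

@dataclass
class TurnAssembler:
    """Hypothetical helper that merges short-pause segments into one user turn."""
    merge_window: float = 1.2  # seconds of silence that ends a turn (assumed)
    avatar_speaking: bool = False
    _segments: List[str] = field(default_factory=list, init=False)
    _last_end: float = field(default=0.0, init=False)

    def add_segment(self, text: str, end_time: float) -> bool:
        """Buffer a recognized segment. Returns True if the avatar should be
        interrupted because the user started talking over it."""
        text = text.strip()
        if not text:
            return False  # ignore empty recognition results
        self._segments.append(text)
        self._last_end = end_time
        barge_in = self.avatar_speaking
        self.avatar_speaking = False
        return barge_in

    def pop_turn(self, now: float) -> Optional[str]:
        """Return the merged turn once the user has been silent long enough."""
        if self._segments and now - self._last_end >= self.merge_window:
            turn = " ".join(self._segments)
            self._segments.clear()
            return turn
        return None
```

Waiting for a short silence before sending trades a little latency for fewer fragmented queries, so the window has to stay well inside the two-to-three-second response budget.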

Grounding & Knowledge Structure

To ground the avatar’s responses in accurate and relevant content, we structured the knowledge base into three thematic domains: company information, event information, and entertainment (e.g., jokes and trivia). In addition, a list of stop-topics was added to the prompt for every domain, including the non-RAG general knowledge responses, to support ethical compliance. Each domain was encapsulated in a modular data source, and ARGO, our agentic orchestration framework, enabled the conversation agent to decide dynamically which source to use for each query.

The datasets varied in size, with company and event content making up the largest share. This required targeted optimization to balance semantic coverage against retrieval performance. The largest datasets exceeded 150 chunks, while the entertainment domain and the stop-topics dataset remained lightweight by design.
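To make the retrieval step concrete, the sketch below ranks a domain's chunks against a query and keeps the top k. The source does not say which retrieval method was used; bag-of-words cosine similarity is swapped in here as a dependency-free stand-in for embedding search, and the sample chunks are invented for illustration.

```python
import math
import re
from collections import Counter
from typing import List, Tuple

def _vector(text: str) -> Counter:
    """Lowercased word counts; a crude stand-in for an embedding."""
    return Counter(re.findall(r"\w+", text.lower()))

def _cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query: str, chunks: List[str], k: int = 3) -> List[Tuple[float, str]]:
    """Return up to k (score, chunk) pairs with a positive score, best first."""
    q = _vector(query)
    scored = [(_cosine(q, _vector(chunk)), chunk) for chunk in chunks]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [pair for pair in scored[:k] if pair[0] > 0]

# Invented sample chunks for the event domain.
EVENT_CHUNKS = [
    "Keynote starts at 9:00 in Hall A.",
    "Lunch is served on level two.",
    "Networking reception begins at 18:00.",
]
```

A small k keeps the prompt short, which matters when the answer must be spoken within a few seconds; larger domains of 150+ chunks are where this ranking step starts to pay off.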

A dedicated prompt ensured that the avatar communicated in short messages with a friendly, natural tone. It was configured to avoid emojis, formatting, and long monologues, which were crucial considerations for a voice-only setup displayed on a large screen in the venue’s main hall.
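The prompt constraints described above (brevity, tone, no formatting, same-language replies, and stop-topics applied to every domain) can be assembled programmatically. This is a hypothetical sketch: the rule wording and the example stop-topics are assumptions, since the actual prompt was not published.

```python
from typing import Iterable, List, Optional

# Hypothetical wording of the style constraints described in the text.
STYLE_RULES = (
    "Answer in one to three short sentences.",
    "Use a friendly, natural, professional tone.",
    "Do not use emojis, markdown, lists, or other formatting.",
    "Reply in the same language the user spoke.",
)

def build_system_prompt(stop_topics: Iterable[str],
                        context_chunks: Optional[Iterable[str]] = None) -> str:
    """Stop-topics are always included; retrieved context only for RAG tools."""
    lines: List[str] = ["You are Jaine, the voice assistant at the GenAI Symposium."]
    lines += STYLE_RULES
    lines.append(f"Politely decline to discuss these topics: {', '.join(stop_topics)}.")
    if context_chunks is not None:
        lines.append("Answer only from the context below; if it does not help, say so briefly.")
        lines.extend(f"- {chunk}" for chunk in context_chunks)
    return "\n".join(lines)
```

Passing `context_chunks=None` covers the non-RAG general knowledge path while still enforcing the stop-topic list.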

Beyond the knowledge base structure, careful prompt engineering was needed to tune the intent classification that the conversation agent used to select the right knowledge dataset.

Admin Interfaces

The Prompt Engineering UI enabled authorized team members to manage and update prompt logic, knowledge base content, and agent tools in real time. In parallel, an Operational Admin Panel was embedded—hidden but accessible—within the avatar display screen. This control panel allowed event staff to adjust microphone sensitivity, switch display layouts, toggle visible text for recognized/synthesized speech, and manually reset the session.
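The operational panel's controls map naturally onto a small, validated settings object, so a mistyped value cannot break the live display. Field names, the 0-to-1 sensitivity scale, and the layout names below are hypothetical; the source only lists the kinds of controls available.

```python
from dataclasses import dataclass, replace
from typing import Any

LAYOUTS = ("fullscreen", "split", "chat_debug")  # hypothetical layout names

@dataclass(frozen=True)
class OperatorSettings:
    mic_sensitivity: float = 0.5         # 0.0 (least) to 1.0 (most); hypothetical scale
    layout: str = "fullscreen"
    show_recognized_text: bool = False   # toggle recognized-speech captions
    show_synthesized_text: bool = False  # toggle avatar-reply captions

def apply_change(current: OperatorSettings, **changes: Any) -> OperatorSettings:
    """Validate and apply an operator change without mutating the live settings."""
    updated = replace(current, **changes)
    if not 0.0 <= updated.mic_sensitivity <= 1.0:
        raise ValueError("mic_sensitivity must be between 0 and 1")
    if updated.layout not in LAYOUTS:
        raise ValueError(f"unknown layout: {updated.layout}")
    return updated
```

Returning a new frozen object means an invalid change is rejected before anything on screen is touched.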

Testing & Evaluation

During testing, the team leveraged our proprietary GenAI Evaluation Tool. This allowed for extensive testing of the avatar’s behavior, tone, accuracy, and responsiveness across a wide range of inputs.

The evaluation dataset consisted of over 450 test prompts spanning all four query domains (the three knowledge-base domains plus general knowledge), including multilingual queries, restricted-topic violations, and edge cases. Each prompt was tested against key criteria: latency, grounding accuracy, classification fidelity, tone consistency, and language switching. This testing helped validate the system’s performance across categories such as company services, event logistics, trivia, and general knowledge.
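Several of these criteria reduce to simple aggregate metrics over per-prompt results. The sketch below is a hypothetical illustration, not the interface of the proprietary GenAI Evaluation Tool. It covers classification fidelity, language switching, and latency against the three-second target; grounding accuracy and tone consistency usually require an LLM or human judge and are omitted.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Dict, List

@dataclass
class EvalResult:
    prompt: str
    expected_intent: str
    predicted_intent: str
    latency_s: float
    expected_language: str
    reply_language: str

def summarize(results: List[EvalResult], latency_budget_s: float = 3.0) -> Dict[str, float]:
    """Aggregate per-prompt results into rates and averages."""
    if not results:
        raise ValueError("no results to summarize")
    n = len(results)
    return {
        "classification_accuracy": sum(r.expected_intent == r.predicted_intent for r in results) / n,
        "language_match_rate": sum(r.expected_language == r.reply_language for r in results) / n,
        "mean_latency_s": mean(r.latency_s for r in results),
        "within_budget_rate": sum(r.latency_s < latency_budget_s for r in results) / n,
    }
```

Reporting the share of replies within budget alongside the mean makes slow outliers visible, since a few long responses can hide behind a healthy average.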

After completing internal evaluation cycles, the team conducted real-world simulation testing, followed by on-site validation.

Real-world conditions were simulated using ambient background noise—played from YouTube channels mimicking event environments—to test speech recognition performance. This noise simulation was key to ensuring the avatar remained accurate and responsive even in a crowded setting. The final stage of implementation involved on-site testing conducted by the company’s Principal Engineer, who validated system behavior in the actual venue before the event went live.

Additionally, Langfuse was integrated into the backend to track complex interactions, prompt routing, and tool executions. This monitoring allowed the team to observe agent behavior, identify anomalies, and apply live optimizations with trace-level visibility.

Outcomes

The avatar was deployed on the main day of the GenAI Symposium and successfully supported real-time conversations with attendees in multiple languages. It remained live and operational for over 9 hours, maintaining an average latency of under three seconds and a high standard of engagement throughout the day. Guests interacted with the avatar to learn session times, get directions, access more information about the company’s offerings, and enjoy casual, friendly exchanges.

The admin tooling and observability stack—including Langfuse—enabled fine-tuned control during the live event, contributing to system reliability and high responsiveness throughout the day.

Feedback from both clients and internal staff was overwhelmingly positive. The avatar was praised for its natural tone, quick responses, and ability to engage users in short, upbeat conversations. It also served as a powerful visual demonstration of the company’s AI capabilities, positioning the company not just as a software vendor but as an innovator in human-centered AI experiences.

Future Considerations

Looking ahead, the team identified several enhancements to further elevate the avatar experience.

- Adding dynamic time-awareness so the system can adapt to the current moment without manual updates

- Implementing a generative small-talk module to reduce repeated phrases

- Expanding deployment beyond events into environments like office lobbies and digital kiosks

- Expanding to accommodate multi-party discussions

- Leveraging the existing speaker diarization feature within the agent’s logic

The project demonstrated how voice AI can become an integral part of physical event spaces—delivering real-time support, personality, and value while showcasing the very best capabilities of intelligent software.

Production Readiness and Tuning Powered by MAIS

Moving a successful proof of concept to a scalable, production-ready system requires a dedicated focus on reliability, performance, and ongoing maintenance. This is where a Managed AI Service (MAIS) becomes crucial. Instead of a one-time deployment, the MAIS approach ensures the system is not only deployed but also continuously optimized for real-world use.

The MAIS team is responsible for managing the underlying infrastructure, guaranteeing high availability and low latency—critical for a live conversational system. They handle the production readiness aspects, including scaling resources to meet demand, implementing robust monitoring and alerting systems, and ensuring security protocols are in place.

Furthermore, the MAIS partnership facilitates continuous tuning. By analyzing user interaction logs and performance data, the team can identify conversational patterns and areas where the model can be improved. This allows for a proactive and iterative process where the model is fine-tuned to enhance conversational flow, reduce errors, and ensure accuracy, thereby guaranteeing a high-quality user experience over the long term. This continuous feedback loop transforms the initial deployment into a living, evolving solution that maintains its effectiveness and relevance.
