AI Assistant with Domain-Tuned Answers: A Scalable RAG Solution in Production

Challenge

A leading audio systems manufacturer required an AI-powered assistant capable of handling diverse requests—retrieving product specifications, accessing technical design files, answering customer questions, and supporting sales representatives. While naive RAG pipelines are common, they treat every query the same way, making them unsuitable for tasks like detailed acoustic advice or product performance calculations. The challenge was to design a solution that combined flexibility, accuracy, and scalability. Additionally, the assistant needed to support agentic AI decision-making, include fallback logic, and provide observability under a managed framework to ensure enterprise-grade reliability.

Key Benefits:

Agentic AI decision-making – Routes queries intelligently across LLMs, databases, or tools.

Scalable RAG pipeline – Fetches domain-specific knowledge with tuned chunking.

Fallback logic and chaining – Ensures reliable results for complex queries.

AI observability with Managed AI Services (MAIS) – Continuously monitors performance and compliance.

Enterprise-grade security and governance – Ensures data safety under managed infrastructure.

Faster deployment cycles – Delivered a functional, production-ready PoC in weeks.

Approach

Our team designed a domain-tuned agentic RAG pipeline built on LangChain with Azure OpenAI GPT-4o. The system applies an agentic architecture where queries pass through an orchestration layer that decides the best execution path. Depending on the query, the agent can:

- Forward general queries to the LLM for natural-language responses.

- Access the company’s vector database for detailed product specifications and documents.

- Invoke external tools or APIs for calculations and precise operations.
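The three execution paths above amount to a dispatch decision. The sketch below is a minimal, framework-free illustration, not the production LangChain agent. In the deployed system GPT-4o makes the routing decision. Here a hypothetical keyword classifier, `choose_route`, stands in for that decision, and the handler functions are placeholders.

```python
import re
from typing import Callable, Dict

# Placeholder handlers for the three execution paths (hypothetical names).
def answer_with_llm(query: str) -> str:
    return f"[LLM] general answer to: {query}"

def search_knowledge_base(query: str) -> str:
    return f"[KB] product documents matching: {query}"

def run_calculation_tool(query: str) -> str:
    return f"[TOOL] computed result for: {query}"

ROUTES: Dict[str, Callable[[str], str]] = {
    "llm": answer_with_llm,
    "knowledge_base": search_knowledge_base,
    "tool": run_calculation_tool,
}

# Hypothetical keyword rules standing in for the LLM's routing decision.
TOOL_WORDS = {"calculate", "compute", "spl"}
KB_WORDS = {"spec", "specs", "specification", "datasheet", "manual"}

def choose_route(query: str) -> str:
    """Return the key of the path that should handle the query."""
    # Match whole words so that, e.g., "display" does not trigger "spl".
    words = set(re.findall(r"[a-z0-9]+", query.lower()))
    if words & TOOL_WORDS:
        return "tool"
    if words & KB_WORDS:
        return "knowledge_base"
    return "llm"

def handle(query: str) -> str:
    return ROUTES[choose_route(query)](query)

if __name__ == "__main__":
    for q in ["Calculate SPL at 4 m", "Show the spec sheet for Speaker A", "What is a subwoofer?"]:
        print(f"{choose_route(q):>14} -> {handle(q)}")
```

Because the handlers live in a dictionary, adding a path does not change the routing code. In a LangChain agent, the rough equivalent is adding another tool to the agent's tool list.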

To improve reliability, the pipeline embeds fallback logic and query chaining, so a query that fails on one pathway is rerouted to another. Observability, provided under Managed AI Services (MAIS), supplies real-time monitoring, auditing, and performance tracking to keep the system trustworthy and compliant. Regular updates to the vector database keep responses current with the latest business information.
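Fallback logic of this kind can be sketched as an ordered chain of handlers. Each pathway is tried in turn, and a failure passes the query to the next one. A failure here means either an exception or an empty answer. This is a minimal illustration under assumed behavior, not the production code. The pathway names and the rule that an empty result counts as a failure are hypothetical.

```python
from typing import Callable, List, Optional, Tuple

Handler = Callable[[str], Optional[str]]

def run_with_fallback(query: str, chain: List[Tuple[str, Handler]]) -> Tuple[str, str]:
    """Try each (name, handler) in order; return the first usable answer and its source."""
    errors: List[str] = []
    for name, handler in chain:
        try:
            answer = handler(query)
        except Exception as exc:  # a failing pathway should not stop the chain
            errors.append(f"{name}: {exc}")
            continue
        if answer:  # None or "" is treated as "no usable answer"
            return name, answer
        errors.append(f"{name}: empty answer")
    raise RuntimeError("all pathways failed: " + "; ".join(errors))

# Hypothetical pathways for illustration only.
def vector_search(query: str) -> Optional[str]:
    return None  # e.g. no document scored above a relevance threshold

def sql_lookup(query: str) -> Optional[str]:
    raise ConnectionError("database unavailable")

def llm_answer(query: str) -> Optional[str]:
    return f"General answer to: {query}"

if __name__ == "__main__":
    source, answer = run_with_fallback(
        "Which amplifier suits a small studio?",
        [("vector_search", vector_search), ("sql_lookup", sql_lookup), ("llm", llm_answer)],
    )
    print(source, "|", answer)
```

Collecting the per-pathway errors, instead of discarding them, gives an observability layer something concrete to log when every route fails.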

Solution

The deployed AI assistant delivered scalable, domain-tuned answers in real production settings. By combining agentic AI decision-making with a robust RAG pipeline, fallback mechanisms, and continuous observability under MAIS, the solution transformed how the client’s teams and customers interact with product information.

What is Agentic RAG?

Agentic RAG is an advanced AI architecture that combines the strengths of Retrieval-Augmented Generation (RAG) with an agent-based decision-making approach.

- Retrieval-Augmented Generation (RAG): RAG is a technique that enhances AI models by letting them fetch relevant information from enterprise-owned, proprietary data sources before generating a response. Instead of relying solely on pre-trained knowledge, the model can query databases, APIs, or other resources to retrieve up-to-date, contextually relevant information. This is particularly useful when the knowledge base is large or changes frequently.

- Agentic Approach: The agentic aspect refers to the system’s ability to decide autonomously how to handle a user’s query. It acts as an orchestrator, determining whether to use a language model (in this project, Azure OpenAI GPT-4o) to generate a response, query an external database for factual information, or invoke a specialized service for precise calculations. This decision-making capability makes the system more flexible and able to handle a wide variety of tasks efficiently.
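The retrieve-then-generate idea behind RAG fits in a few lines. In this sketch, `embed` is a toy bag-of-words stand-in for a real embedding model, and the product passages are invented for illustration. The code ranks passages by cosine similarity to the question, keeps the top *k*, and prepends them to the prompt that would be sent to the LLM.

```python
import math
import re
from collections import Counter
from typing import List

def embed(text: str) -> Counter:
    """Toy bag-of-words vector; a real pipeline would call an embedding model instead."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query: str, docs: List[str], k: int = 2) -> List[str]:
    """Return the k passages most similar to the query."""
    q = embed(query)
    return sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)[:k]

def build_prompt(query: str, context: List[str]) -> str:
    """Augment the user question with the retrieved passages before generation."""
    joined = "\n".join(f"- {c}" for c in context)
    return f"Answer using only the context below.\nContext:\n{joined}\nQuestion: {query}"

# Invented sample passages, for illustration only.
DOCS = [
    "Speaker A: sensitivity 89 dB, frequency response 45 Hz to 20 kHz.",
    "Amplifier B: 2 x 100 W into 8 ohms, class D.",
    "Warranty terms: two years for all products.",
]

if __name__ == "__main__":
    question = "What is the frequency response of Speaker A?"
    print(build_prompt(question, retrieve(question, DOCS, k=1)))
```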

Implementation Overview

The solution incorporated the following key components:

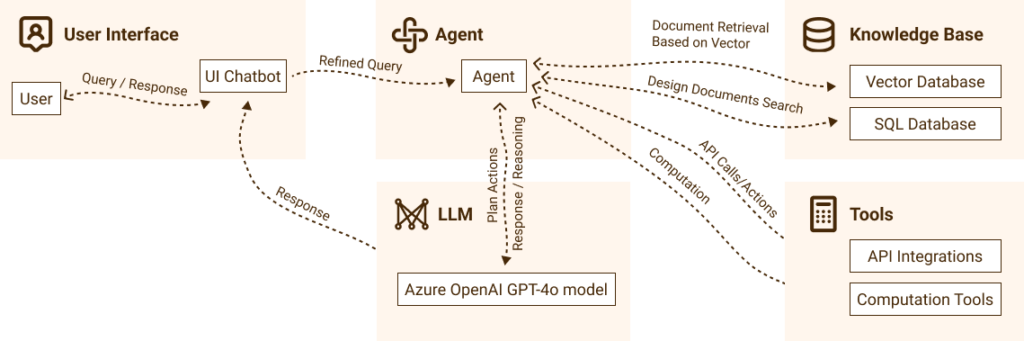

- Agentic RAG Layer: As shown in the schema above, the Agentic RAG layer serves as the core decision-making unit. A user query passes from the front end to the agent, which refines the query and decides the appropriate action. The layer is implemented with LangChain’s Python support for agents.

- LLM (Large Language Model): The agent may direct general queries to the LLM (Azure OpenAI GPT-4o was selected), which answers from its pre-trained knowledge. This path is typically used for standard queries that need neither external data retrieval nor complex calculations. GPT-4o supports agent workflows and function calling, and it is among the most capable models for this task.

- Knowledge Base Access: For queries that require specific product details or documentation, the agent accesses the company’s vector database. An additional business process was implemented to update the vector database regularly with the most recent marketing materials. This process uses vector calculation (embeddings) to index the marketing information with semantic tags. Together, these components let the agent fetch the most relevant, up-to-date information for its response. A domain-specific chunking strategy was also applied to improve the quality of the context passed to the LLM.

- Tools for Precise Operations: If a query requires complex calculations or the use of external APIs, the agent redirects the query to the appropriate computation tools or API integrations. This ensures that calculations are accurate and that the information provided is reliable.
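The article does not describe the chunking strategy itself. One plausible domain-specific approach for product sheets is to split at section headings rather than at a fixed character count. Each chunk is then tagged with its product and section, so that "Sensitivity 89 dB" still says which speaker it belongs to once embedded. The sketch below assumes a hypothetical convention where headings are lines such as `Specifications:`. The sample sheet is invented.

```python
import re
from typing import Dict, List, Tuple

# Hypothetical convention: a section heading is a line like "Specifications:".
HEADING = re.compile(r"^([A-Z][A-Za-z ]+):$")

def chunk_product_sheet(product: str, text: str) -> List[Dict[str, str]]:
    """Split a product sheet at section headings instead of at a fixed size."""
    sections: List[Tuple[str, List[str]]] = []
    current, body = "Overview", []
    for raw in text.splitlines():
        line = raw.strip()
        match = HEADING.match(line)
        if match:
            sections.append((current, body))
            current, body = match.group(1), []
        elif line:
            body.append(line)
    sections.append((current, body))

    chunks = []
    for section, lines in sections:
        if lines:  # skip headings with no content
            chunks.append({
                "product": product,
                "section": section,
                # Prefix product and section so each chunk stays self-describing once embedded.
                "text": f"{product} / {section}: " + "; ".join(lines),
            })
    return chunks

SHEET = """Studio monitor with a 6.5-inch woofer.
Specifications:
Frequency response 45 Hz to 20 kHz
Sensitivity 89 dB
Connectivity:
XLR and TRS inputs
"""

if __name__ == "__main__":
    for chunk in chunk_product_sheet("Speaker A", SHEET):
        print(chunk["text"])
```

Keeping each specification block intact in a single chunk means a retrieved chunk carries the complete set of related figures, not an arbitrary slice of them.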

How the Architecture Works

- Query Processing: The user’s query is submitted via the front-end chatbot interface and is initially processed by the Agentic RAG. The agent refines the query, deciding which subsystem (LLM, Knowledge Base, or Tools) should handle it.

- Decision-Making:

  - For general questions, the query is passed to the LLM for response generation.

  - For specific product design information, the agent queries the company’s structured data, stored in an SQL database.

  - For complex calculations, the agent calls external computation tools and API integrations.

- Response Generation: Once the appropriate data or computation is completed, the agent compiles the information and sends the final response back to the user through the chatbot interface.
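The structured-data path and the final response step can be sketched with Python's built-in `sqlite3` module. The table name, columns, and rows are hypothetical. The real schema of the client's SQL database is not described. The model name is passed as a query parameter rather than formatted into the SQL string, because it originates from user input.

```python
import sqlite3
from typing import Optional

def make_demo_db() -> sqlite3.Connection:
    """In-memory database with a hypothetical product table and invented rows."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE products (model TEXT PRIMARY KEY, sensitivity_db REAL, max_power_w REAL)"
    )
    conn.executemany(
        "INSERT INTO products VALUES (?, ?, ?)",
        [("Speaker A", 89.0, 150.0), ("Speaker B", 92.0, 300.0)],
    )
    return conn

def lookup_specs(conn: sqlite3.Connection, model: str) -> Optional[dict]:
    # Parameterized query: never interpolate user-supplied text into SQL.
    row = conn.execute(
        "SELECT model, sensitivity_db, max_power_w FROM products WHERE model = ?", (model,)
    ).fetchone()
    if row is None:
        return None
    return {"model": row[0], "sensitivity_db": row[1], "max_power_w": row[2]}

def compile_response(specs: Optional[dict]) -> str:
    """Turn the retrieved data into the reply sent back to the chat interface."""
    if specs is None:
        return "I could not find that model in the product database."
    return (f"{specs['model']}: sensitivity {specs['sensitivity_db']:g} dB, "
            f"maximum power {specs['max_power_w']:g} W.")

if __name__ == "__main__":
    conn = make_demo_db()
    print(compile_response(lookup_specs(conn, "Speaker A")))
    print(compile_response(lookup_specs(conn, "Speaker Z")))
```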

Agentic RAG Schema

The schema below visually represents how Agentic RAG interacts with various components in our project:

Results:

The GenAI PoC successfully showcased the following:

Precise product specifications delivered instantly from proprietary databases.

Accurate execution of complex calculations through specialized tools.

Neutral, fact-based responses to competitive queries.

Rapid PoC delivery in just two weeks, accelerating adoption.

Technologies

- LangChain-based Agentic RAG: Central decision-making AI that manages query routing.

- Azure OpenAI GPT-4o: Language model for generating natural-language responses.

- Azure Cognitive Services: Used for integrating external systems and databases.

- Custom Calculation Tools: Python code integrated for performing precise calculations.
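The sketch below illustrates what a custom calculation tool could look like. It is not the client's actual tool. `estimate_spl` applies the standard free-field estimate: sound pressure level equals sensitivity (dB at 1 W / 1 m), plus 10·log10 of the input power in watts, minus 20·log10 of the distance in meters. `ESTIMATE_SPL_TOOL` describes the tool in the JSON-schema style used by OpenAI-compatible function calling. `dispatch_tool_call` is a hypothetical helper that runs the tool once the model returns a tool name and a JSON argument string.

```python
import json
import math

def estimate_spl(sensitivity_db: float, power_w: float, distance_m: float) -> float:
    """Free-field SPL estimate for a point source (inverse-square law)."""
    if power_w <= 0 or distance_m <= 0:
        raise ValueError("power_w and distance_m must be positive")
    return sensitivity_db + 10 * math.log10(power_w) - 20 * math.log10(distance_m)

# Tool description in the JSON-schema form used for function calling.
ESTIMATE_SPL_TOOL = {
    "type": "function",
    "function": {
        "name": "estimate_spl",
        "description": "Estimate the sound pressure level (dB) of a loudspeaker at a distance.",
        "parameters": {
            "type": "object",
            "properties": {
                "sensitivity_db": {"type": "number", "description": "Sensitivity in dB at 1 W / 1 m"},
                "power_w": {"type": "number", "description": "Input power in watts"},
                "distance_m": {"type": "number", "description": "Listening distance in meters"},
            },
            "required": ["sensitivity_db", "power_w", "distance_m"],
        },
    },
}

TOOLS = {"estimate_spl": estimate_spl}

def dispatch_tool_call(name: str, arguments_json: str) -> str:
    """Run the tool the model requested; the arguments arrive as a JSON string."""
    args = json.loads(arguments_json)
    result = TOOLS[name](**args)
    return json.dumps({"result_db": round(result, 1)})

if __name__ == "__main__":
    print(dispatch_tool_call("estimate_spl",
                             '{"sensitivity_db": 89, "power_w": 100, "distance_m": 4}'))
```

The arithmetic is done in plain Python rather than by the LLM, so the figure returned to the user is computed deterministically. The model only chooses the tool and fills in its arguments.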

What’s Next?

See how MAIS manages complex agentic AI pipelines.

Schedule a demo with First Line Software today to explore the scalability of RAG pipeline architectures in production environments.